Explaining Docker in Front-End Terms

As front-end developers, we’re used to dealing with buzzwords and an ever-increasing number of technologies to learn. For years, we’ve been bombarded with library after library, each of them combined with numerous frameworks and their contradicting approaches.

If you’ve been in the industry for more than a couple of years, odds are your skin has already started getting thicker from all the fancy words the industry is throwing at us. We hear about Docker, Kubernetes, containerization, and all the others. They all sound like pretty complicated concepts, but don’t feel intimidated. In this article, I’m going to explain the one you hear the most.

This article is for front-end developers who want to learn what the fuss with Docker is all about and would like to see how they can utilize Docker to improve their daily work.

I don’t expect you to have more knowledge than any average front-end developer would. Mind you, this article is more of a theoretical explanation of Docker’s main features and use cases rather than a practical tutorial about how to implement them.

Terminology

Let’s start with a quick round of terminology before I start explaining everything in detail.

- Container: A container is a standard unit of software that packages up code, and all its dependencies, so the application runs quickly and reliably from one computing environment to another.

- Image: An image is an unchangeable, static file that includes executable code and all its dependencies, except the operating system kernel. When an image is executed, it creates containers that run the code inside the image using the files inside that image.

- Containerization: The process of encapsulating executable code inside containers and running those containers in a virtual environment, such as the cloud.

Docker is a containerization solution, so we’ll need to start by explaining what containers are and how they work in detail.

So What Are Containers Anyway?

You can think of a container as a kind of virtual machine or an iframe. Much like an iframe, a container’s purpose is to isolate the processes and code executions inside it from external interference.

In the front-end world, we use iframes when we want to isolate external resources from our website for many reasons. Sometimes this is to ensure that there isn’t any unwanted clash of CSS or JavaScript execution; other times it is to enforce a security layer between the host and the imported code.

For example, we place advertisement units inside iframes because they’re often built by separate teams, or even separate companies, and deployed independently from the team that manages the host website. In such cases, it is nearly impossible to manage CSS and JS clashes between the two sides.

Another use case would be to enforce security. A PayPal button on a checkout page, for example, is placed in an iframe to ensure that the host website cannot access any information you have on your PayPal account. It cannot even click that button for you. So even if the website you’re paying is hacked, your PayPal will be safe as long as PayPal itself is safe.

Docker’s initial use cases are the same. You get to isolate two apps from each other’s processes, files, memory, and more, even if they’re running on the same physical machine. For instance, if a database is running inside a Docker container, another app cannot access that database’s files unless the database container is configured to allow it.

So a Docker container is a virtual machine?

Kind of — but not exactly.

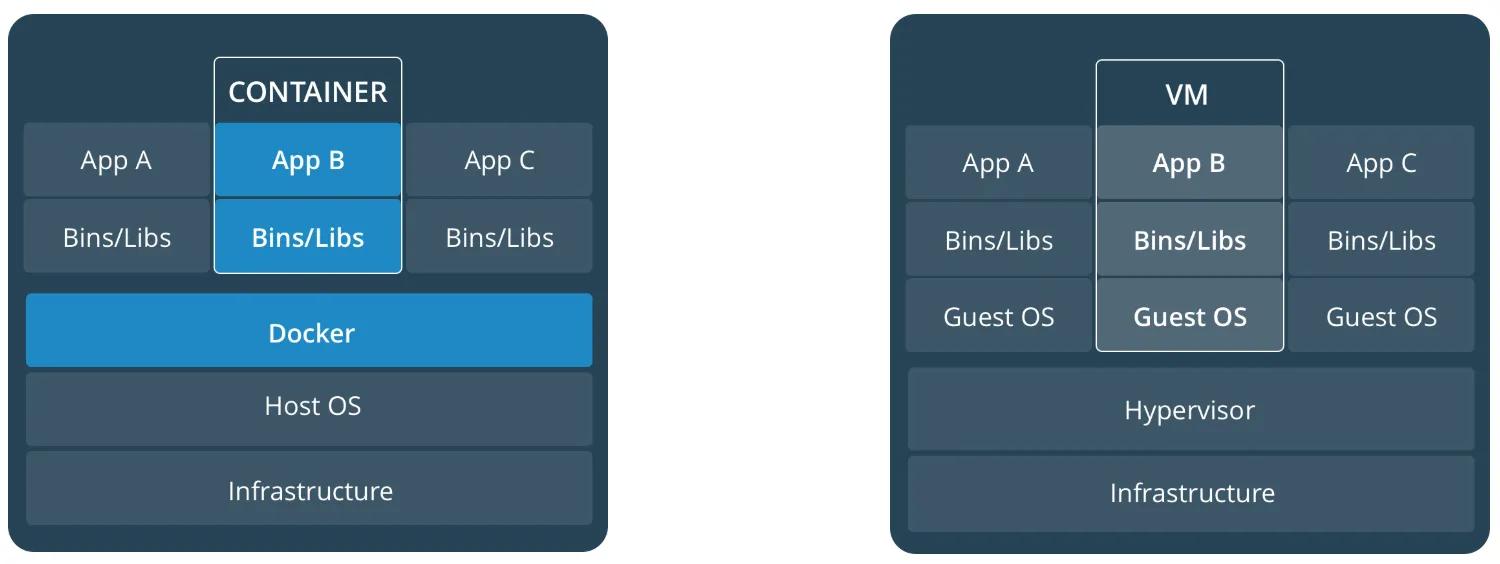

Virtual machines run their own operating systems. This allows you to run macOS, Linux, and Windows all on the same computer, which is amazing but comes at a cost: each virtual machine boots a complete guest operating system, and the boundaries between these systems have to be strictly enforced to prevent conflicts.

But for most intents and purposes, the containers don’t need completely separate operating systems. They just need isolation.

So what Docker does is use kernel-level isolation on Linux to isolate the resources of an app while giving it the functionalities of the underlying operating system. Containers share the operating system but keep their isolated resources.

That means much better resource management and smaller image sizes. Containers only consume the RAM and CPU their processes actually use, and Docker can cap those amounts when needed. A virtual machine, by contrast, needs a fixed amount of resources dedicated to it up front, whether it always uses them or not.

There we go: We now know the basics of what Docker is and what Docker containers are. But isolation is just the start. Once we get these performant and isolated containers and a powerful resource manager (Docker) to manage them, we’re able to take it to the next step.

Reproducible Containers

Another thing Docker does very well is to give us a way to declaratively rebuild our containers.

All we need is a Dockerfile to define how Docker should build our containers, and we know that we’ll get the same container every time, regardless of the underlying hardware or the operating system. Think about how complicated it is to implement a responsive design across all desktop and mobile devices. Wouldn’t you love it if it were possible to define what you need and get it everywhere without a headache? That’s what Docker is trying to accomplish.

Before we go into a real-life use case, let’s quickly go over Docker’s life cycle to understand what happens when.

A Docker container’s life cycle

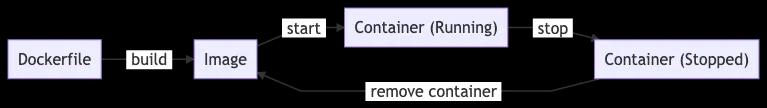

It all starts with a Dockerfile that defines how we want Docker to build the images that the containers will be based on.

Docker uses the Dockerfile to build images. It fetches the files, executes the commands, does whatever is defined in the Dockerfile, and saves the result in a static file that we call an image. Docker then uses this image to create a container that executes predefined code, using the files inside that image. So a usual life cycle goes from Dockerfile to image to container.

Let’s unwrap this with a real use case.

Running tests on continuous integration (CI)

A common use case for Docker in front-end development is running unit or end-to-end tests on continuous integration before deploying the new code to production. Running them locally is great when writing the code, but it is always better to run them in an isolated environment to ensure that your code works everywhere regardless of the computer setup.

Also, we all have that one teammate who always skips the tests and just pushes the code. So a CI setup is also good to keep everyone in check. Below is a very basic container setup that will run your tests when you run the container:

gist:ozantunca/5870e33d4d6b0f5997b8403fb1cdfb49#Dockerfile

Let’s go over the commands there to understand what is happening.

FROM is used for defining a base image to build on. There are a lot of images already available in the public Docker registry. FROM node:12 goes to the public registry, grabs an image with Node.js installed, and brings it to us.

COPY is used for copying files from the host machine to the container. Remember that the container has an isolated file system. By default, it doesn’t have access to any files on our computer. We run COPY . /app to copy the files from the current directory to the /app directory inside the container. You can choose any target directory. This /app here is just an example.

WORKDIR is basically the cd command we know from UNIX-based systems. It sets the current working directory.

RUN is quite straightforward. It runs the command that follows it inside the image we’re building, at build time.

CMD is kind of similar to RUN. It runs the command that follows it inside the container as well. But instead of running it at build time, it runs the command at run time. Whatever command you provide to CMD will be the command that’s going to be run after the container is started.

This is all it takes for our Dockerfile to build the template of a container that will set up a Node.js environment and run npm test.
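Putting those five commands together, a minimal sketch of such a Dockerfile could look like the one below. The FROM node:12 and COPY . /app lines come straight from the explanation above. Using /app as the working directory and npm install as the build-time step are assumptions made for illustration, so the actual file may differ.

```dockerfile
# Base image: Node.js 12 from the public Docker registry
FROM node:12

# Copy the project from the host's current directory into /app in the image
COPY . /app

# Make /app the working directory for the instructions that follow
WORKDIR /app

# Build time: install dependencies once, while the image is being built
# (assumption: the project declares its dependencies in package.json)
RUN npm install

# Run time: the command executed each time a container starts from this image
CMD ["npm", "test"]
```

With a hypothetical image name such as frontend-tests, running docker build -t frontend-tests . in the project directory would build the image, and docker run --rm frontend-tests would start a container that runs the tests and exits with their status code.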

Of course, this use case is just one of the many use cases containers have. In a modern software-architecture setup, most server-side services either already run inside containers or the engineers have plans to migrate to that architecture. Now we’re going to talk about perhaps the most important problem these images help us solve.

Scalability

This is something we front-end developers often overlook. That’s because even though the back-end code runs on only a few servers for all the users, the code we write runs on a separate machine for every user we have. They even buy those machines (personal computers, smartphones, etc.) that they run our code on. This is an amazing luxury that we front-end developers have that the back-end developers do not.

On the server side of things, scalability is a real issue that requires a lot of planning around the infrastructure architecture and the budget. Cloud technologies made creating new machine instances a lot easier, but it’s still the developer’s job to make their code work on a completely new machine.

That’s where our consistently reproducible containers come in handy. Thanks to the image Docker has built for us, we can deploy as many containers as we want (or we can afford to pay for). No more creating a new virtual machine, installing all the dependencies, transferring the code, setting the network permissions, and many more steps we used to take just to get a server running. We already have all of that done inside an image.

Conclusion

Docker has certainly revolutionized the way we develop and deploy software in the last few years. I hope I’ve been able to shed some light on the reasons for its popularity.

Containerization and the mindset it brought with it will, without question, continue to impact how we build software in the upcoming years.